Comprehension SEEDING

(Classroom Response Technology)

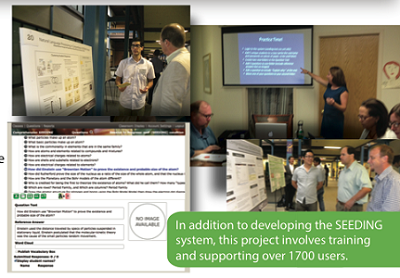

We are conducting research with collaborators to help instructors assess student knowledge and skills in real time (Comprehension SEEDING; Nielsen PI, IES $1.83M 2011-2014 with ASU and UCD). Students use mobile devices to submit free-text responses to an instructor's open-ended questions. An NLP system clusters the answers and gives the instructor feedback on, among other things, the types of misconceptions present and how frequently each occurs. Unlike clicker technology, this approach requires students to articulate their understanding of a concept, which numerous cognitive science researchers have shown to be key to deep learning. This research will benefit from aspects of my Ph.D. work, which was the first research to successfully assess elementary students' one- to two-sentence constructed-response answers.
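
The answer-clustering step can be illustrated with a minimal sketch. It assumes a plain bag-of-words representation with a small stopword list and a greedy similarity-threshold ("leader") clustering. The function names, the stopword list, the 0.4 threshold and the sample answers are all hypothetical and are not taken from the SEEDING system; a practical system would likely need much richer semantic analysis to group paraphrased answers.

```python
import math
import re
from collections import Counter

# Hypothetical stopword list; a practical system would need much richer NLP.
STOPWORDS = {"the", "a", "an", "it", "because", "of", "to", "is", "are", "and"}


def bag_of_words(text):
    """Count the lowercased content words in one student answer."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cluster_answers(answers, threshold=0.4):
    """Greedy leader clustering: each answer joins the first cluster whose
    first member (its leader) is at least `threshold` similar; otherwise it
    starts a new cluster. Clusters are returned largest first."""
    leaders, clusters = [], []
    for answer in answers:
        vec = bag_of_words(answer)
        for i, leader in enumerate(leaders):
            if cosine(vec, leader) >= threshold:
                clusters[i].append(answer)
                break
        else:
            leaders.append(vec)
            clusters.append([answer])
    return sorted(clusters, key=len, reverse=True)


if __name__ == "__main__":
    # Hypothetical answers to "Why does a dropped ball fall?"
    answers = [
        "gravity pulls the ball down",
        "the ball falls because gravity pulls it",
        "heavy things fall faster",
        "heavier objects fall faster",
        "gravity makes the ball fall",
    ]
    for cluster in cluster_answers(answers):
        print(len(cluster), "answers like:", cluster[0])
```

Sorting clusters by size puts the most common response types first; in this toy run the smaller cluster collects the "heavier objects fall faster" answers, the kind of misconception an instructor would want to see counted.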

More Info on the Comprehension SEEDING Project.

Companionbots

(Spoken Dialogue Educational Health and Wellbeing Companion Robots)

The number of people over 65 in the U.S. will more than double in roughly the first quarter of this century. Many of these seniors would prefer to maintain their independence and remain in their own homes, and many suffer from depression. Together with collaborators, we are researching ways to support them through emotive spoken-dialogue companion robots (Companionbots; Nielsen PI, NSF $1.96M total 2011-2015; UNT, CU, DU, UCD Anschutz Medical Campus, and Boulder Language Technologies). The research focuses on dialogue, especially generating and answering questions, in the context of providing education and training related to depression, monitoring participants for signs of physical, mental, or emotional deterioration, and serving as a companion. The NLP will capitalize on multimodal input and output, will be heavily context-dependent, and will be tightly integrated with a user model and history. The ML will emphasize co-training on multimodal input and user-assisted semi-supervised learning from massive data sets and data streams. Future work will include massive-scale data mining over the information the Companionbots collect.
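
To make "co-training on multimodal input" concrete, here is a minimal sketch of a two-view co-training loop. It assumes each interaction yields two feature views (for example, a hypothetical acoustic view and a hypothetical facial-expression view) and uses simple nearest-centroid classifiers. The feature values, labels and function names are invented for illustration and do not describe the Companionbots implementation.

```python
import math

# Hypothetical example data: each item is ((acoustic_view, facial_view), label).
# Feature values and labels are invented for illustration only.
LABELED = [
    (([0.1, 0.2], [0.1]), "negative"),
    (([0.9, 0.8], [0.9]), "positive"),
]
UNLABELED = [
    ([0.2, 0.1], [0.2]),
    ([0.8, 0.9], [0.7]),
    ([0.15, 0.25], [0.3]),
    ([0.85, 0.75], [0.95]),
]


def centroids(points, labels):
    """Mean feature vector for each class label."""
    sums, counts = {}, {}
    for x, y in zip(points, labels):
        sums.setdefault(y, [0.0] * len(x))
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(cents, x):
    """Nearest-centroid label plus a confidence score: the gap between the
    distances to the nearest and second-nearest centroids."""
    ranked = sorted((math.dist(c, x), y) for y, c in cents.items())
    margin = ranked[1][0] - ranked[0][0] if len(ranked) > 1 else 0.0
    return ranked[0][1], margin


def co_train(labeled, unlabeled, rounds=3, per_round=1):
    """Two-view co-training: in each round, the classifier for each view is
    retrained on the shared labeled set, pseudo-labels the unlabeled examples
    it is most confident about, and moves them into the labeled set, where
    they also train the classifier for the other view."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(rounds):
        for view in (0, 1):
            if not pool:
                return labeled
            cents = centroids([x[view] for x, _ in labeled], [y for _, y in labeled])
            pool.sort(key=lambda x: predict(cents, x[view])[1], reverse=True)
            for x in pool[:per_round]:
                labeled.append((x, predict(cents, x[view])[0]))
            pool = pool[per_round:]
    return labeled


if __name__ == "__main__":
    for (acoustic, facial), label in co_train(LABELED, UNLABELED, rounds=2):
        print(acoustic, facial, label)
```

Because each view only adds the examples it is most confident about, a view that is reliable for a given example can supply labels that the other view then learns from.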

More Info on the Companionbots Project.

I Spy

(An Interactive Game-Based Approach to Multimodal Robot Learning)

Teaching robots about objects in their environment requires correlating images with linguistic descriptions to build complete feature and object models. These models can be created manually by collecting images and related keywords and presenting the pairings to robots, but doing so is tedious and unnatural. I Spy reframes the problem of training robots to learn about the world around them by embedding the task in a vision- and dialogue-based game.
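
One way to picture the multimodal correlation described above is a learner that grounds each description word in the visual features of the objects players describe with it. The sketch below assumes hand-picked three-dimensional features (redness, roundness, size), a per-word mean feature vector as the word model, and a small filler-word list. All names and values are hypothetical; this is an illustration, not the I Spy system's actual learning method.

```python
import math
import re
from collections import defaultdict

# Hypothetical filler words ignored when grounding descriptions.
STOPWORDS = {"a", "an", "the", "it", "is", "and", "i", "spy", "something"}


def content_words(text):
    """Lowercased word tokens with filler words removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


class WordGrounder:
    """Hypothetical learner: each word's model is the mean visual feature
    vector of the objects players have described with that word."""

    def __init__(self, dim):
        self.sums = defaultdict(lambda: [0.0] * dim)
        self.counts = defaultdict(int)

    def observe(self, description, features):
        """One game round: a description of the target plus its features."""
        for word in set(content_words(description)):
            self.sums[word] = [s + f for s, f in zip(self.sums[word], features)]
            self.counts[word] += 1

    def word_model(self, word):
        """Mean feature vector for a word, or None if it was never seen."""
        n = self.counts.get(word, 0)
        return [s / n for s in self.sums[word]] if n else None

    def guess(self, description, candidates):
        """Index of the candidate closest, on average, to the known words' models."""
        models = [m for m in map(self.word_model, content_words(description)) if m is not None]
        if not models:
            return 0  # nothing grounded yet, so fall back to the first candidate

        def score(features):
            return sum(math.dist(m, features) for m in models) / len(models)

        return min(range(len(candidates)), key=lambda i: score(candidates[i]))


if __name__ == "__main__":
    robot = WordGrounder(dim=3)  # hypothetical features: [redness, roundness, size]
    robot.observe("red round ball", [0.9, 0.9, 0.3])
    robot.observe("red cube", [0.9, 0.1, 0.4])
    robot.observe("green round apple", [0.1, 0.9, 0.2])
    robot.observe("big green box", [0.1, 0.1, 0.9])
    candidates = [[0.1, 0.9, 0.2], [0.9, 0.8, 0.3], [0.8, 0.1, 0.5]]
    print(robot.guess("I spy something red and round", candidates))  # prints 1
```

Each additional round of play refines the word models without anyone hand-pairing images and keywords, which is the motivation given above for the game-based setup.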

More Info on the I Spy Project.

Health Informatics

More Info on Health Informatics.